Building a SaaS Platform for a Support Center

TL;DR

I designed a continuous discovery process that fed insights from support center agents into our product roadmap on a weekly basis. The resulting new SaaS platform cut call times by two minutes, increased support center capacity by 8%, and dramatically reduced staff training time.

Research type: Continuous Discovery

UX skills: Concept Testing, Contextual Inquiry, Diary Study, Focus Group, Journey Mapping, Personas, Usability Testing

My role: UX Research Lead

Cross-functional team: UX Research, UX/UI Design, Product Management, Engineering, Support Center Operations, Service Design

Timeline: Nine months for MVP build

Context

A national nonprofit that connects eligible people to government programs was using proprietary support center software to screen people for public benefits and submit applications on their behalf.

The software was outdated, inefficient, and not user-friendly, leading to expensive staff training and time-consuming workarounds. The team decided to build new software that would be efficient, user-friendly, and scalable. I wanted the users, the support center agents, to provide substantial input into the development process to ensure the software met their needs. With this in mind, I decided on a continuous discovery approach.

Research Process: Continuous Discovery

RECRUITMENT

The support center was under-resourced and agents had strict schedules, with restricted time off the phones. Scheduling ad hoc research sessions with agents proved very difficult.

I developed a continuous discovery process for the duration of the build, with two group sessions dedicated each week to research and testing with five rotating agents from different campaigns.

With this format, operations could plan resourcing across campaigns ahead of time. Cross-functional partners (PMs, devs, designers) were invited to these sessions as “optional” attendees, and we held a weekly debrief on key insights.

RESEARCH GOALS

Foundational/discovery research: Understand support center agents’ experiences with the current software and map their journey, highlighting pain points and opportunities.

METHODS: Interviews, Focus Groups, Contextual Inquiry, Diary Study (conducted outside of weekly sessions)

Evaluative research: Test new software designs iteratively with support center agents throughout the build.

METHODS: Concept Testing, Usability Testing

Analysis & Synthesis

I used thematic analysis and affinity mapping throughout the project to synthesize the large volume of qualitative data collected through the various research methods.

Outputs

USER JOURNEY

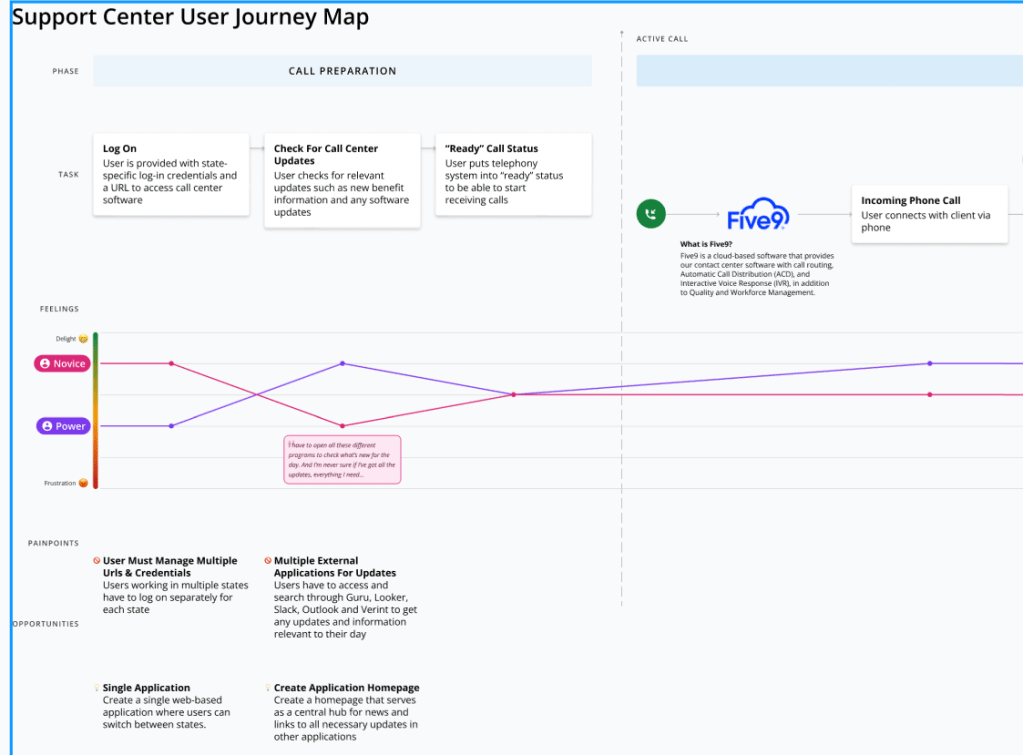

I partnered with our UX Designer to create a user journey map through the current software, using the insights from the discovery research. We mapped support center agents’ emotional journey, pain points, and opportunities to help guide the product roadmap.

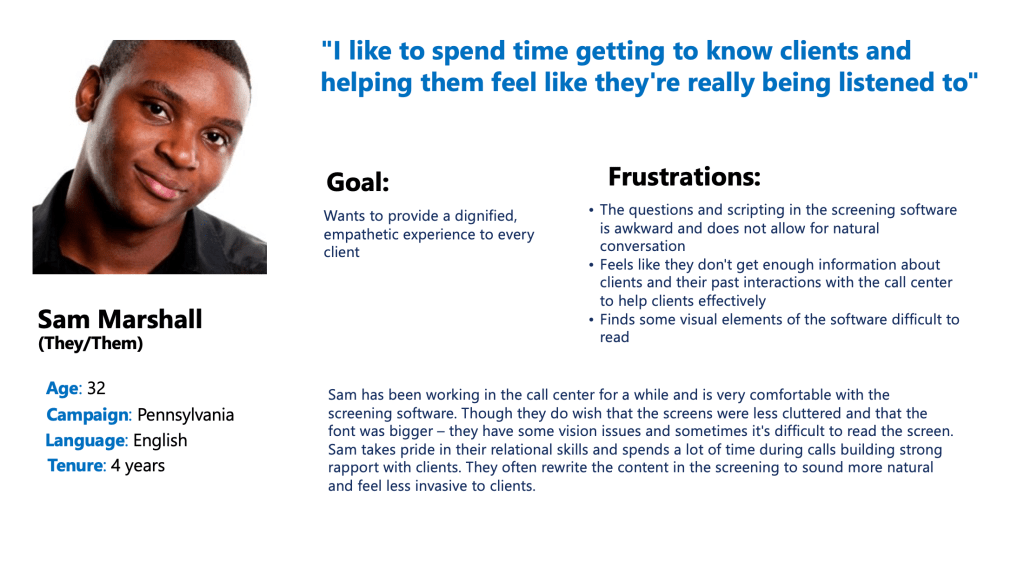

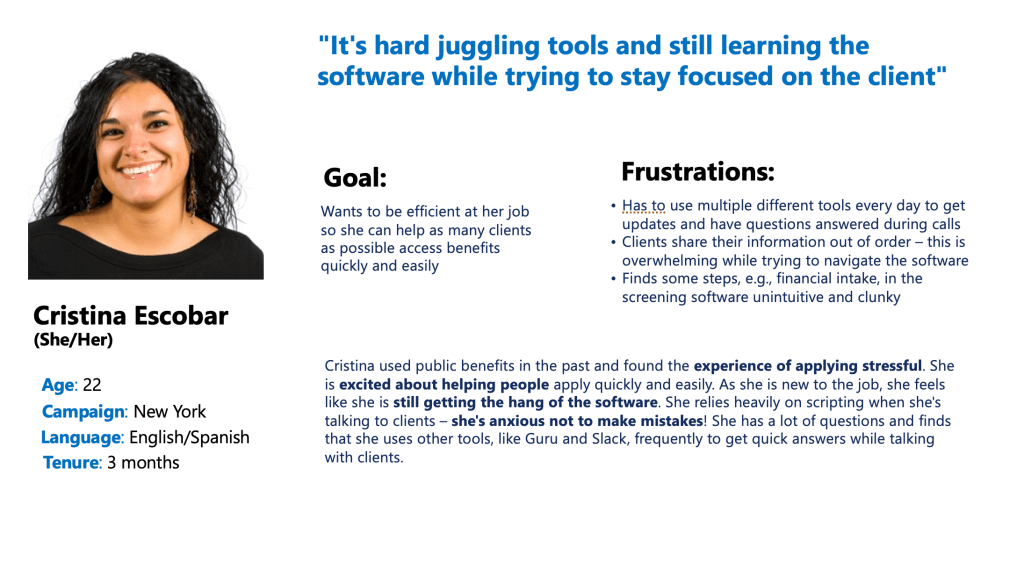

PERSONAS

I ran a series of workshops with our cross-functional team and stakeholders to create personas based on the research insights. Involving the whole team and stakeholders helped socialize the personas as useful artifacts throughout the build process.

INSIGHTS & ITERATIONS

Every week, we held a debrief discussing the insights from the user sessions. The insights guided feature creation and design iterations, from concept to wireframe to prototype, week by week, as shown below.

INSIGHT

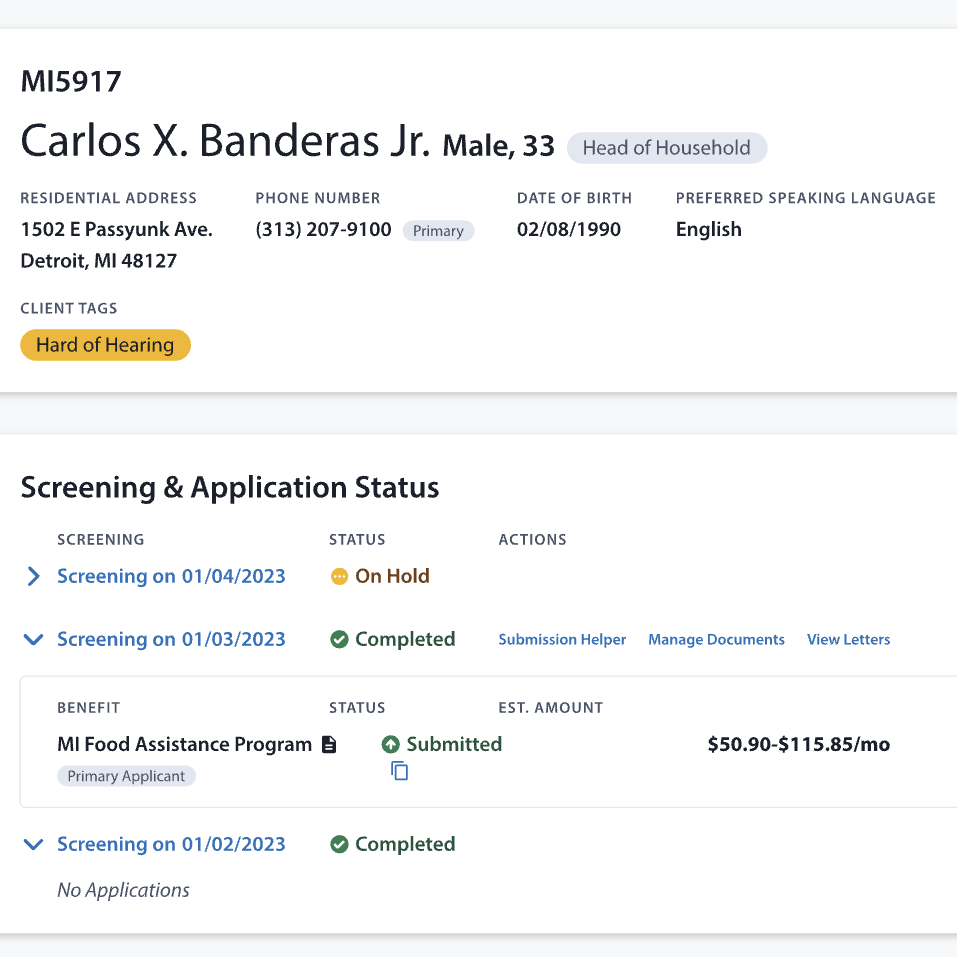

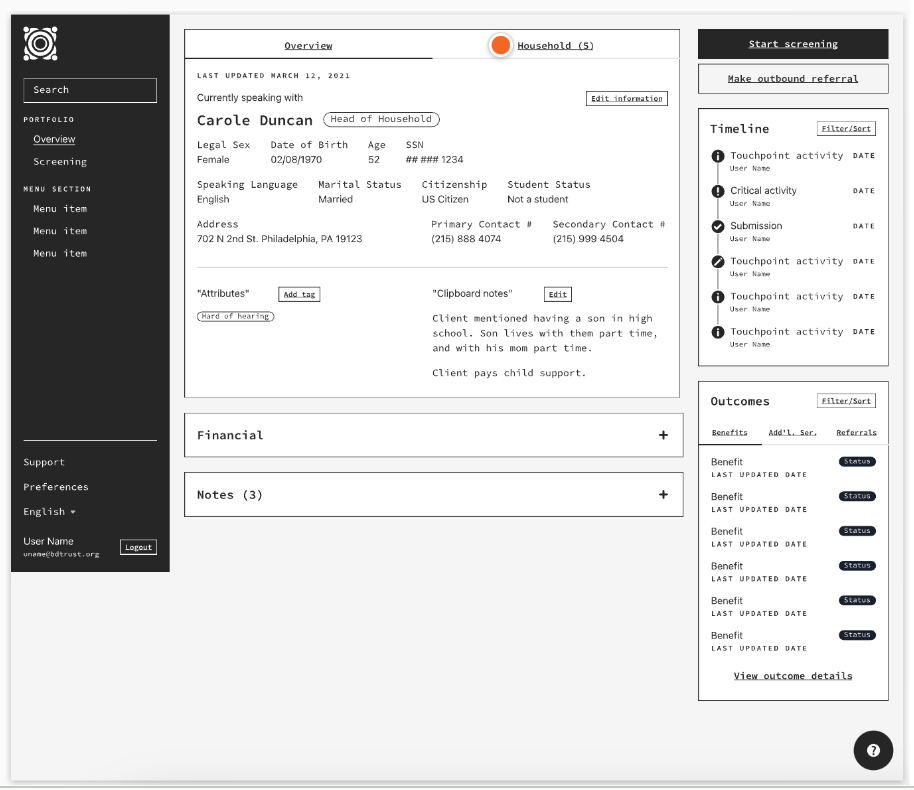

Agents do not feel well-prepared at the start of a call. They want access to more client information as well as a clear, contextual interaction history.

RECOMMENDATION

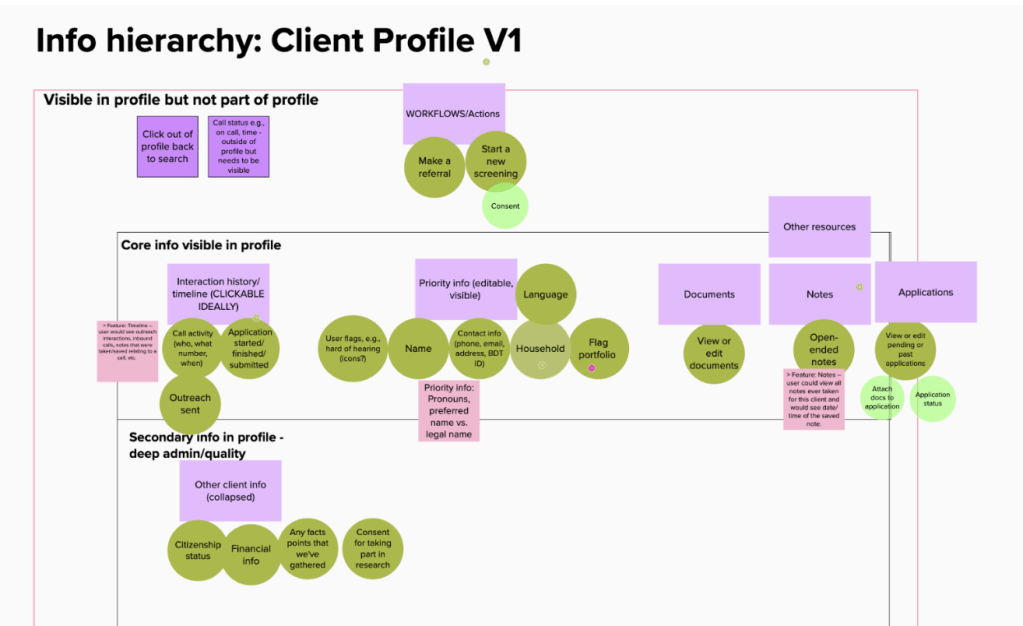

As a cross-functional team, we decided to create a client profile feature containing important information for agents.

Design Iterations

Impact

After the new software was installed, the average call time decreased by two minutes. Over a year, that adds up to 13 thousand calls, or 570 applications: one whole extra month of benefit applications!
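
As a rough sanity check on these figures, the sketch below shows how a per-call time saving translates into extra capacity. It is illustrative only: the 27-minute baseline call length is a hypothetical value chosen for the example, not a figure from the project, and `capacity_gain` is a helper name introduced here. It also shows that one extra month out of twelve is roughly the same size as the 8% capacity increase reported in the TL;DR.

```python
# Figure reported in the case study.
MINUTES_SAVED_PER_CALL = 2.0


def capacity_gain(old_minutes: float, saved_minutes: float) -> float:
    """Fractional increase in calls handled in the same amount of agent time."""
    new_minutes = old_minutes - saved_minutes
    if new_minutes <= 0:
        raise ValueError("saved_minutes must be smaller than old_minutes")
    return old_minutes / new_minutes - 1


# One extra month of output in a twelve-month year, as a fraction.
one_month_gain = 1 / 12

# ASSUMPTION: hypothetical baseline call length, not taken from the study.
hypothetical_old_minutes = 27.0
gain = capacity_gain(hypothetical_old_minutes, MINUTES_SAVED_PER_CALL)

print(f"One extra month in twelve: {one_month_gain:.1%}")
print(f"Gain at a {hypothetical_old_minutes:.0f}-minute baseline: {gain:.1%}")
```

With a different baseline call length the percentage changes, so the real figure depends on the support center's actual call data.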

The first support center agent to screen a client using the new software described the experience: “Like butter, so smooth!”

Reflections & Next Steps

The continuous discovery process was, overall, a success for this project and helped the team get into a solid cadence of research, testing, and design iterations.

It was, however, difficult to analyze the data and provide useful insights to the rest of the team every couple of days for nine months. If I did this again, I would allow more time for data synthesis and analysis (e.g., by running design sprints ahead of dev sprints).

After launch, I gathered feedback from agents using the new software through a dedicated Slack feedback channel, watched call recordings of agents using the new software, and ran 1-on-1 feedback sessions. I also partnered with the data analytics team to gather metrics, such as number of errors (noted during quality control call monitoring), length of calls, and number of applications submitted. Using all this data, I partnered with product to add bug tickets, enhancements, and new feature requests to the backlog.